In 2023, artificial intelligence (“AI”) entered our daily lives in meaningful ways. The latest data shows that four in five teenagers in the United Kingdom are using generative AI tools, while about two-thirds of Australian employees report using generative AI for work. At first, many people used these tools because they were curious about generative AI or wanted to be entertained. Now, people ask generative AI for help with their studies or for practical advice, or use it to find or synthesize information. Other uses include getting help with coding and making images, videos, or audio. Meanwhile, so-called “prompt whisperers” or prompt engineers offer guides not just on designing the best AI prompts, but even on how to blend different AI services to achieve fantastical outputs.

AI uses and functions have also shifted over the past 12 months as technological development, regulation and social factors have shaped what is possible. Here is where we are at, and what might come in 2024 …

AI changed how we work & pray

Generative AI made waves early in the year when it was used to enter and even win photography competitions, and when it was tested for its ability to pass school exams. ChatGPT, the chatbot that has become a household name, reached a user base of 100 million by February – about four times the size of Australia’s population. Some musicians used AI voice cloning to create synthetic music that sounds like popular artists, such as Drake, The Weeknd, and Eminem. Google launched its chatbot, Bard. Microsoft integrated AI into its Bing search engine. Snapchat launched MyAI, a ChatGPT-powered tool that allows users to ask questions and receive suggestions.

GPT-4, the latest version of the AI model that powers ChatGPT, launched in March. This release brought new features, such as the ability to analyze documents or longer pieces of text. That same month, corporate giants like Coca-Cola began generating ads partly through AI, while Levi’s said it would use AI to create virtual models. The now-infamous image of the Pope wearing a white Balenciaga puffer jacket went viral. A cohort of tech evangelists also called for a pause on AI development.

Amazon began integrating generative AI tools into its products and services in April. Meanwhile, Japan ruled there would be no copyright restrictions on training generative AI in the country. In the United States, screenwriters went on strike in May, demanding a ban on AI-generated scripts. Another AI-generated image, purportedly showing the Pentagon on fire, went viral. In July, worshippers experienced some of the first religious services led by AI. In August, two months after AI-generated summaries became available in Zoom, the company faced intense scrutiny for changes to its terms of service around consumer data and AI. The company later clarified its policy and pledged not to use customers’ data to train AI without their consent.

In September, voice and image features came to ChatGPT for paid users. Adobe began integrating generative AI into applications like Illustrator and Photoshop. And by December, we saw an increased shift to “Edge AI,” where AI processing happens locally on the devices themselves rather than in the cloud. This approach has benefits in contexts where privacy and security are paramount. Meanwhile, EU lawmakers reached a provisional agreement on the AI Act, billed as the world’s first comprehensive law governing AI.

Where to from here?

Given the whirlwind of AI developments in the past 12 months, we are likely to see more incremental changes in the next year and beyond. In particular, we expect to see changes in these four areas …

Increased bundling of AI services and functions – ChatGPT was initially just a chatbot that could generate text. Now, it can generate text, images, and audio. Google’s Bard can now connect to Gmail, Docs and Drive, and complete tasks across these services. By bundling generative AI into existing services and combining functions, companies will try to maintain their market share and make AI services more intuitive, accessible, and useful. At the same time, bundled services make users more vulnerable when the inevitable data breaches happen, because a single breach can expose data drawn from several connected services at once.

Higher quality, more realistic generations – Earlier this year, AI generators struggled to render human hands and limbs. By now, they have markedly improved on these tasks. At the same time, research has shown how biased many AI generators can be. Some developers have created models with diversity and inclusivity in mind. Companies will likely see a benefit in providing services that reflect the diversity of their customer bases.

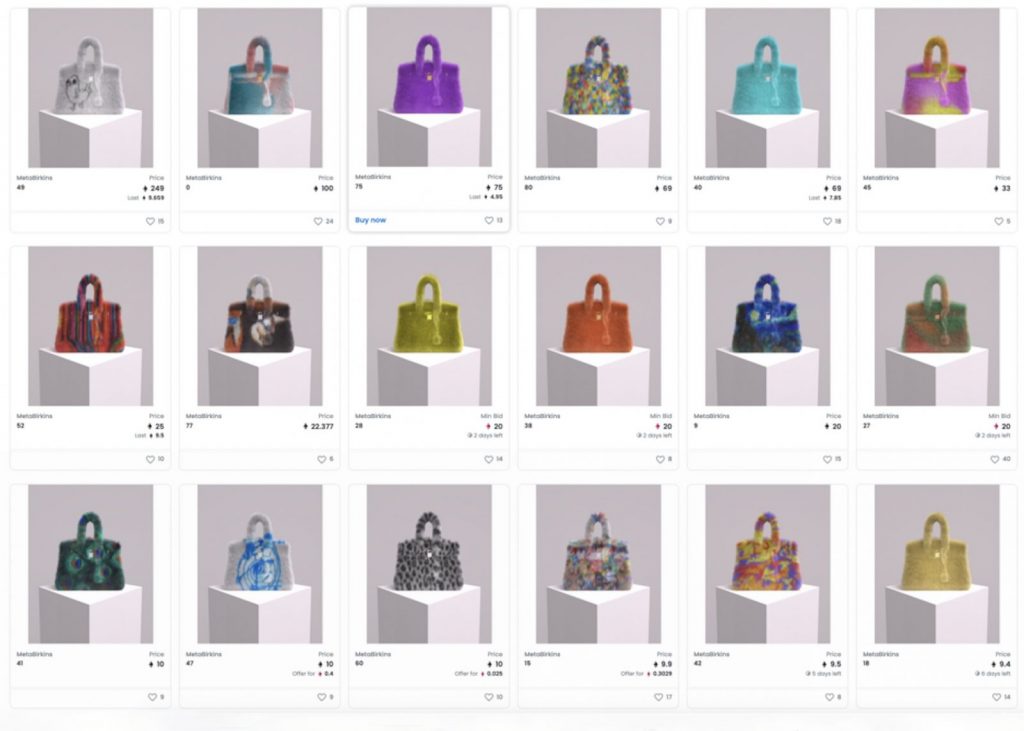

Growing calls for transparency and media standards – Various news platforms were slammed in 2023 for producing AI-generated content without clearly disclosing it. AI-generated images of world leaders and other newsworthy subjects abound on social media, with high potential to mislead and deceive. To improve public trust, the media industry will need to develop standards that transparently and consistently denote when AI has been used to create or augment content.

Expansion of sovereign AI capacity – In these early days, many have been content to explore AI’s possibilities playfully. However, as these AI tools begin to unlock rapid advancements across all sectors of our society, more fine-grained control over who governs these foundational technologies will become increasingly important. In 2024, we will likely see future-focused leaders encouraging the development of their countries’ sovereign AI capabilities through increased research and development funding, training programs and other investments.

For the rest of us, whether you are using generative AI for fun, work, or school, understanding the strengths and limitations of the technology is essential for using it in responsible, respectful, and productive ways. It is equally important to understand how others – from governments to doctors – are increasingly using AI in ways that affect you.

T.J. Thomson is a Senior Lecturer in Visual Communication & Digital Media at RMIT University.

Daniel Angus is a Professor of Digital Communication at Queensland University of Technology. (This article was originally published by The Conversation.)