Reface is at the center of a proposed class action complaint, with Kyland Young accusing the company behind the deepfake app of running afoul of California’s right of publicity law by enabling users to swap faces with famous figures – albeit without ever receiving authorization from those well-known individuals to use their likenesses. According to the complaint that he filed in a California federal court on April 3, Young asserts that Reface developer NeoCortext, Inc. has “commercially exploit[ed] his and thousands of other actors, musicians, athletes, celebrities, and other well-known individuals’ names, voices, photographs, or likenesses to sell paid subscriptions to its smartphone application, Reface, without their permission.”

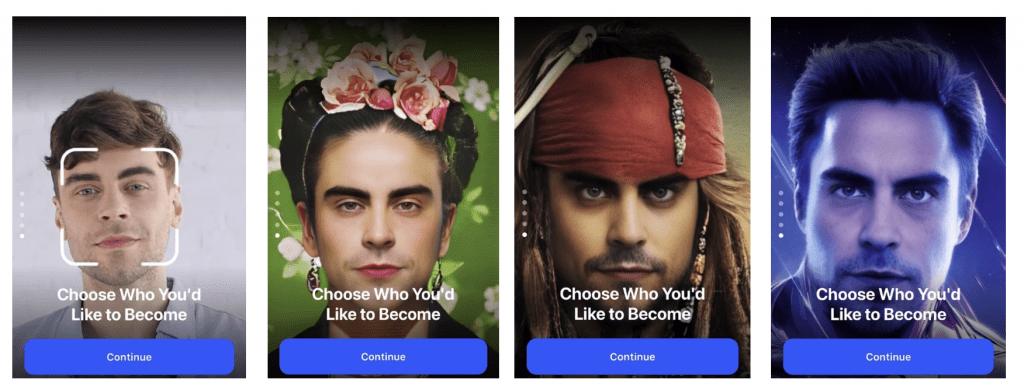

Setting the stage in his complaint, which he says is “not about the legality of deepfake technology or the creative ways Reface application users choose to use [its] technology” and is instead “about a company exploiting well-known Californians’ names, voices, photographs, and likenesses to pitch its product for profit,” Young states that by way of the Reface app, NeoCortext “allows users to swap their faces with individuals they admire or desire in scenes from popular shows, movies, and other viral short-form internet media,” thereby resulting in the creation of deepfake imagery.

ICYMI: Deepfakes are a “class of synthetic media generated using artificial intelligence,” per MIT Technology Review. Merriam-Webster describes this type of AI-generated output as “an image or recording that has been convincingly altered and manipulated to misrepresent someone as doing or saying something that was not actually done or said.”

As for how Reface works: Young states that users can take or upload a selfie, which enables them to “swap” faces with “well-known real individuals and fictional characters,” which are depicted in the Reface “Pre-sets catalogue.” This catalogue is searchable, Young asserts, and “allows users to search for specific individuals they would like to become.” The catalogue includes images of Young, who claims that “Paying PRO Users can become Kyland Young, the finalist of season 23 of CBS’s Big Brother, and recreate his scenes from the television show.” Young argues that “even though [NeoCortext] profits off [his] and other class members’ identities, it neither sought nor obtained” consent to do so, and “certainly never paid [him] or other class members a dime in royalties.”

Given that California law recognizes an individual’s right of publicity, and prohibits companies from commercially using another person’s name, voice, photograph, or likeness without that person’s consent, Young alleges that NeoCortext violated his right of publicity – and the rights of other individuals – by failing to “ask him or others similarly situated for their consent to commercially exploit attributes of their identities” – such as images of them and their names – “in [its] teaser face swaps or the paid PRO version of the Reface application.”

Young further alleges that NeoCortext not only deprived him and the other class members – i.e., “all California residents whose name, voice, signature, photograph, or likeness was displayed on a Reface application Teaser Face Swap or the PRO Version of the Reface application on or after April 3, 2021” – of compensation “in the form of a royalty or otherwise” in exchange for its use of their identities, but also, by failing to secure authorization to use their likenesses, “deprived [them] of control over whether and how their [likenesses] can be used for commercial purposes.”

Young sets out a single cause of action, accusing NeoCortext of violating the California Right of Publicity Statute (Cal. Civ. Code § 3344). In addition to injunctive relief to enjoin NeoCortext from using his and the other class members’ “names, voices, signatures, photographs, or likenesses for commercial purposes,” Young is seeking monetary damages (“the greater of actual damages or $750 per violation to each class member” plus reasonable litigation expenses and attorneys’ fees), as well as certification of the proposed class action.

THE BIGGER PICTURE: The lawsuit comes amid rising concerns over the use of generative artificial intelligence (“AI”) tools to create deepfakes, particularly since text-to-image synthesis engines are more powerful than the generative adversarial network tech previously utilized to create deepfakes, according to UC Berkeley computer science professor Hany Farid. Generative AI tools “are capable of creating nearly any image, including images that include an interplay between people and objects with specific and complex interaction,” he states, which poses potential problems not only due to the risk of abuse, but because legislation and regulation are (unsurprisingly) lagging far behind the advances being made in this space.

Sheppard Mullin’s James Gatto stated in a recent note that “responsible companies are taking proactive steps to minimize the likelihood that their generative AI tools inadvertently violate the right of publicity, [with] some examples of these steps including attempts to filter out celebrity images from those used to train the generative AI models and filtering prompts to prevent users from requesting outputs that are directed to celebrity based name, image, and likeness.”

The case is Kyland Young v. NeoCortext, Inc., 2:23-cv-02496 (C.D. Cal.).