As technological innovations in e-commerce continue to explode, retailers are increasingly utilizing customer data to personalize customer experiences, prevent fraud, improve their services, and make money through third-party sales. A wide array of new data analytics tools allows retailers to study a vast range of information – from users’ order history to their exact mouse movements – to better understand their customer base. With any new business strategy comes risk, and plaintiffs’ attorneys are seeking huge damages awards by using a number of novel data privacy theories to attack companies’ practices.

On top of that, legislators are (at times, very slowly) responding to concerns about how businesses use personal information by proposing new consumer data privacy laws that limit the collection and sale of personal information. Here is Part II of a two-part look (you can find Part I here) at some of the most prominent trends in data privacy litigation, highlighting the issues that companies should consider in order to avoid finding themselves on the receiving end of similar cases.

One hot litigation trend from last year – concerning “session replay” technology – has practically come to a halt. Although several dozen of these cases were filed in spring and summer 2021, none have been filed in recent months. This is likely because one of these cases, Johnson v. Blue Nile, Inc., is currently pending before the U.S. Court of Appeals for the Ninth Circuit, which is expected to resolve the question of whether the state wiretapping statutes at issue in these suits even apply to session replay technology.

Session replay technology allows a company to play back any visitor’s online session – including their clicks, typing, and scrolling – often in order to make sure that websites operate properly (such as after an update, or in response to a glitch), or to make the websites easier to navigate. These cases are normally filed under California, Pennsylvania, or Florida wiretapping statutes, based on the theory that when a consumer navigates a website, he or she is communicating with the online retailer, and that the vendors who offer session replay technology engage in “wiretapping” by intercepting those alleged communications without the customer’s consent.

Thus far, courts have generally granted defendants’ motions to dismiss on the basis that using session replay technology is not wiretapping. Specifically, they have ruled that: (1) online shopping is not a “communication” that can be wiretapped; (2) session replay technology vendors are not “intercepting” anything because they are directly involved in the consumer’s use of the website; and (3) there is no reasonable expectation of data privacy for consumers in the context of online shopping.

The court in Johnson v. Blue Nile, Inc. held that collecting customer data through session replay technology is not an unlawful “interception” of information because Blue Nile and its software vendor were parties to separate communications with the plaintiff. That is, there were direct communications between the vendor and the plaintiff, and separate direct communications between Blue Nile and the plaintiff, rather than a single communication between Blue Nile and the plaintiff that the vendor intercepted.

The Blue Nile case has been on appeal since August 2021, with the opening brief filed on December 1, 2021, and the answering brief not due until February 25, 2022. If the Ninth Circuit reverses the district court, then the wave of session replay cases is almost certain to return.

Biometric Privacy Suits Are Growing

All the while, more and more retailers have introduced virtual try-on tools that use biometric technology to recreate the fitting room experience for their online consumers. At the same time, many others use fingerprinting to track when employees clock in and out. As the popularity of these tools grows, so does the legal risk from the growing number of biometric data privacy lawsuits.

The majority of these lawsuits have been filed under Illinois’s Biometric Information Privacy Act (“BIPA”), which is the only state biometric privacy law to provide a private right of action. Passed in 2008, BIPA prohibits a business from “collect[ing], captur[ing], purchas[ing], receiv[ing] through trade, or otherwise obtain[ing]” a person’s biometric data – which plaintiffs have claimed includes fingerprints, face and body scans, voice, typing style, and data regarding any other physiological or behavioral characteristics – without first providing written notice of the company’s biometric data collection, retention, and storage practices and obtaining written consent. In 2019, the Illinois Supreme Court held that individuals can bring claims under BIPA even if they have not been injured by the defendant’s failure to satisfy these notice and consent requirements.

BIPA also requires businesses to “store, transmit and protect” biometric data “in a manner that is the same as or more protective than” their treatment of other confidential or sensitive information, and imposes a blanket prohibition on “sell[ing], leas[ing], trad[ing] or otherwise profit[ing] from” a person’s biometric information, though other disclosures are permitted after obtaining informed consent.

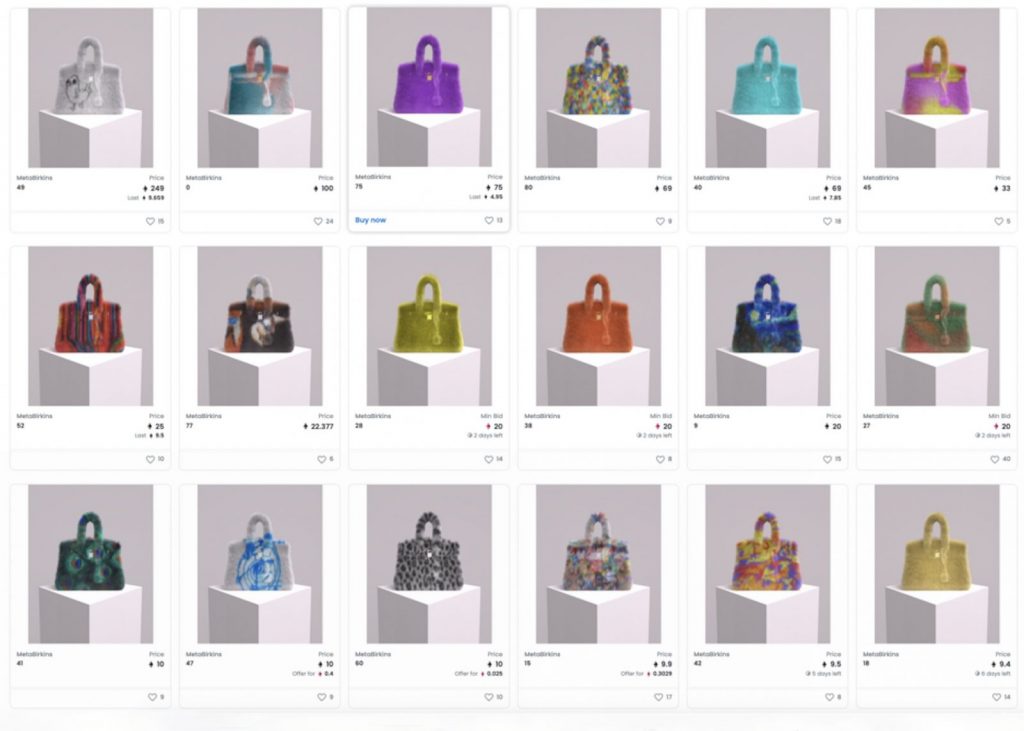

Under BIPA, consumers can sue for statutory damages up to $1,000 for each negligent violation and up to $5,000 for each intentional or reckless violation, making it particularly attractive to plaintiffs’ firms. Likely for that reason, over 750 BIPA suits have been filed since its passage. These lawsuits typically allege that a business collected the plaintiff’s data without first obtaining adequate informed consent, even if in connection with a legitimate employment function, marketing purpose, or product feature. Many of these suits have targeted retailers that offer virtual try-on features (for example, Zenni Optical and Mary Kay), makers of “smart” products (Procter & Gamble was sued over its Oral-B smart toothbrush and Subaru was sued over its DriverFocus system), and employers, such as H&M, that use fingerprinting technology for timekeeping and security purposes.

Given the steep penalties, BIPA cases often settle for millions of dollars – last year, social media giant TikTok settled a BIPA case for a whopping $92 million.

The U.S. Court of Appeals for the Seventh Circuit and the Illinois Supreme Court are currently considering the appeal of Cothron v. White Castle, and the important question of what constitutes a “violation” for the purpose of calculating statutory damages. In that case, the plaintiff has claimed that her employer, defendant White Castle, repeatedly violated BIPA each week for nearly ten years when it collected and stored her fingerprint, which she scanned each week in order to access her weekly paystubs and sign various documents. The plaintiff has argued that while she had initially consented to the practice in 2007, that consent was invalidated upon BIPA’s 2008 passage and the new requirements therein, and she has sought enormous penalties based on each distinct weekly violation – even though the circumstances of each scan (and, of course, the fingerprint) were largely identical.

White Castle moved for judgment on the pleadings and, among other things, argued that defining “violation” in this manner would lead to absurd results that the legislature could not have intended. White Castle appealed the trial court’s denial of its motion, and the Seventh Circuit held oral arguments on September 14, 2021. On December 20, 2021, the Seventh Circuit asked the Illinois Supreme Court to weigh in on the issue. The Illinois Supreme Court’s forthcoming decision will have an enormous impact on the scope of defendants’ liability in BIPA cases, as well as the statute’s attractiveness to plaintiffs.

Other Legislation Governing Biometric Data

While BIPA remains the most frequently litigated biometric privacy statute, it is certainly not the only one. Texas (in 2009) and Washington state (in 2017) passed biometric privacy laws that place similar disclosure and protection obligations on businesses but permit certain limited commercial uses of biometric data after obtaining consent. Neither includes a private cause of action.

But BIPA may not hold this unique position for long. In January, Kentucky legislators introduced HB 32, a BIPA copycat biometric privacy law with identical provisions, including its consent requirements, prohibitions on profits, private right of action, and damages amounts. While this measure has yet to clear the initial committee stages, if passed, it could usher in a second wave of cases as numerous and burdensome as the BIPA litigation. Several other states, including Maryland and New York, are also considering biometric laws with private rights of action, but these measures are more permissive than BIPA and may be amended as they move past the early stages of consideration.

At least two cities have jumped into the biometric fray and passed narrower measures authorizing private causes of action. New York City’s biometric privacy rule will take effect in July, and will require all food and drink establishments and places of entertainment in New York City that collect, retain, convert, store, or share “biometric identifier information” from customers to post clear, conspicuous notices near all customer entrances to their facilities. The NYC law provides a private right of action (but only after giving businesses notice and 30 days to cure) with damages ranging from $500 to $5,000 per violation, plus attorneys’ fees. Portland, Oregon’s ban on private businesses using facial recognition technology is already in effect and authorizes individuals to sue for $1,000 for each day of violation, plus attorneys’ fees.

Data Privacy Practices

In this ever-digitizing world, where concepts like “the metaverse” have become household conversation topics, it’s widely understood that information from nearly every transaction or piece of communication is stored by someone, somewhere. On the one hand, this offers benefits on all sides – companies receive comprehensive insights for growing their business, and consumers receive products that are tailored to their interests. On the other hand, this proliferation of data collection has induced significant pushback.

Recently enacted legislation aims to draw a line between acceptable uses of personal information and violations of privacy, and plaintiffs are using those laws to target companies in hopes of securing a nice payday. Understanding the facts and outcomes of recent lawsuits against companies that use technology like session replay, biometrics, and the Retail Equation, and being familiar with the statutes at play in those cases, will go a long way toward helping executives and in-house counsel craft sensible data privacy practices.

Stephanie Sheridan is a partner at Steptoe & Johnson LLP, and the chair of the firm’s Retail Practice Group. Meegan Brooks and Surya Kundu are associates at Steptoe & Johnson LLP.